DeepTech Will Shape the Next Decade

Semiconductor and hardware innovation are critical to the continued evolution of technology and of the entire global economy. The software giants of our generation (Microsoft, Google, Facebook and Amazon) all rely on semiconductors as their foundation. Over the past five years, funding for the bottom of the technology stack, where hardware resides, has been anemic, while investment in software and software services has reigned supreme. Yet future software development will rely on new, custom forms of hardware, and the emerging importance of custom hardware warrants examination by any dedicated or future tech investor. In examining these momentous and undeniable shifts in the semiconductor space, we set out to explain the interrelationship between software innovation and hardware development and to support our underlying conviction: the future of technology over the next decade hinges on DeepTech.

A Decade of Software Dominance

For the past twenty years, software has won the battle for primacy in the technology space, earning the lion’s share of economic growth, valuation premia and venture investing focus. In August 2011, Marc Andreessen wrote his now-famous Wall Street Journal opinion piece declaring that “software is eating the world.” He has been proven right.

Over the past decade, software and outsourced cloud infrastructure have enabled affordable compute and storage and the “as-a-service” consumption model. This, in turn, has driven successful innovation across broad swaths of our economy. LinkedIn has changed the way we connect professionally; Square and Venmo have changed the way we pay our neighborhood vendors and friends. We have Spotify for music, Amazon for shopping, and Netflix for streaming television. These are but a few of the cloud-based, as-a-service winners that have grown to meet evolving consumer preferences.

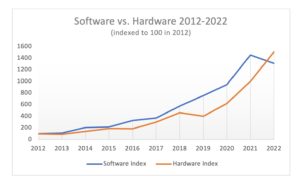

This prolific innovation in software and services is reflected in returns to investors. An equally weighted basket of software stocks (Activision, Adobe, Amazon, Facebook, Microsoft, Netflix, PayPal, Salesforce, ServiceNow, and Splunk) yielded a tremendous 35% annual return, or almost 15x in aggregate, from 2012 to 2020.

For the same period, 2012 to 2020, a basket of hardware stocks (AMD, Analog Devices, Broadcom, HP, Intel, Micron, Nvidia, Qualcomm, Texas Instruments, and TSMC) yielded 29% per annum, or 10x in aggregate. Then something dramatic occurred in 2021: the hardware stocks continued their trend, trading up 26%, while the software stocks declined 8%, an outperformance of 34 percentage points! Perhaps “eating the world” is causing a bit of indigestion?
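The aggregate multiples above follow from compounding the annual returns. The article does not say how many compounding periods the 2012 to 2020 window covers; the sketch below assumes nine yearly periods (2012 through 2020 inclusive), which reproduces both quoted multiples. The function name `aggregate_multiple` is purely illustrative.

```python
def aggregate_multiple(annual_return: float, years: int) -> float:
    """Total growth multiple from compounding a constant annual return."""
    return (1.0 + annual_return) ** years


# Assumption: 2012 through 2020 counts as nine annual periods.
YEARS = 9

software_multiple = aggregate_multiple(0.35, YEARS)  # 35% per year
hardware_multiple = aggregate_multiple(0.29, YEARS)  # 29% per year

# 2021 returns in percent; the gap between them is in percentage points.
hardware_2021, software_2021 = 26, -8
spread_2021 = hardware_2021 - software_2021

print(f"software basket: {software_multiple:.1f}x")
print(f"hardware basket: {hardware_multiple:.1f}x")
print(f"2021 hardware lead: {spread_2021} percentage points")
```

This prints roughly 14.9x and 9.9x, matching the “almost 15x” and “10x” figures, and a 2021 gap of 34 percentage points. The 34 is a difference between two returns, not a 34% relative gain.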

We have reached a turning point. The market has begun to recognize that the hardware development cycle must accelerate to enable further software-led innovation. Software did indeed eat the world, innovating at a pace few anticipated; further gains, however, will require hardware innovation as a new foundation.

Moore’s Law and the Software Ecosystem

For fifty years, Moore’s Law has driven improvement in the underlying computational and storage hardware (“semiconductor compute” or “compute”). Moore’s Law has consistently provided 50% more compute every two years at 35% less cost. Think about your own personal computer upgrades: predictably getting 50% more compute at dramatically lower cost allows for amazing performance improvements.
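To see what these rates imply when compounded, the sketch below treats each two-year cycle as delivering 1.5x the compute at 0.65x the cost. Reading “35% less cost” as the price of the new chip relative to the old one is our interpretation, and the helper `after_cycles` is hypothetical.

```python
COMPUTE_GAIN = 1.50  # 50% more compute per two-year cycle, as stated
COST_FACTOR = 0.65   # 35% less cost per cycle (our reading of the claim)


def after_cycles(cycles: int) -> tuple[float, float, float]:
    """Return (compute multiple, cost multiple, compute-per-dollar multiple)."""
    compute = COMPUTE_GAIN ** cycles
    cost = COST_FACTOR ** cycles
    return compute, cost, compute / cost


for years in (2, 10, 20):
    compute, cost, per_dollar = after_cycles(years // 2)
    print(f"{years:>2} years: {compute:7.1f}x compute, "
          f"{cost:.3f}x cost, {per_dollar:8.1f}x compute per dollar")
```

Under this reading, compute per dollar improves about 2.3x per cycle, which compounds to well over an order of magnitude within a decade.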

History Computer illustrates the impact of scaling in its November 14, 2021 article, “The Complete Guide to Moore’s Law”:

“In 1970, a chip containing 2,000 transistors cost around $1,000. In 1972, you could buy that same chip for about $500, and in 1974, its price went down to about $250. In 1990, the price of that same amount of transistors was only $0.97, and today, the cost is under $0.02.”
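The quoted prices are consistent with the cost of a fixed transistor count halving every two years. A minimal check, assuming a clean two-year halving from the 1970 starting point (the helper name `implied_price` is ours):

```python
START_YEAR = 1970
START_PRICE = 1000.0  # dollars for a 2,000-transistor chip in 1970, per the quote
HALVING_YEARS = 2     # assumed halving period


def implied_price(year: int) -> float:
    """Price of the same transistor count if cost halves every HALVING_YEARS."""
    halvings = (year - START_YEAR) / HALVING_YEARS
    return START_PRICE / 2 ** halvings


for year in (1972, 1974, 1990):
    print(year, f"${implied_price(year):,.2f}")
```

Ten halvings between 1970 and 1990 give $1,000 / 1,024, or about $0.98, in line with the quoted $0.97.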

This hardware progress has been the foundation of development for the entire technology ecosystem, and arguably for the entire US economy. Packing more transistors into each square millimeter lowers the average cost per chip, generating massive economies of scale. Software-oriented development is predicated upon these improvements in the underlying compute. Said differently, compute provides the foundation for software.

This has transformed the way people ‘compute’. Within living memory, a computer meant an IBM mainframe. Mainframes were displaced by personal computers, then by mobile phones and tablets and, eventually, by watches. All of these changes were made possible by the scaling effects of semiconductors, and each, in turn, created a massive new ecosystem of devices and software-driven applications. We are now on the cusp of a new hardware-driven cycle of innovation.

Predictable Moore’s Law scaling allowed step-function improvements in compute capability, which gave software developers several orders of magnitude more flexibility and freedom to innovate. Developers could customize functionality in software to meet the growing needs of an expanding user base, ultimately bringing a “computer” to nearly every person on the planet through the smartphone. Ironically, this meant there was little economic incentive to specialize the hardware, given its longer development cycles and higher cost. General-purpose compute was more than adequate for the software-defined ecosystem, and the model of general-purpose compute plus custom software became the norm for the past decade.

Challenges to Moore’s Law

Unfortunately, Moore’s Law is now fundamentally challenged, and the model of predictable compute scaling has changed for good. Physics is catching up with improvements in transistor density, causing chip development to take longer and cost substantially more. For example, Intel has needed more than five years to advance to each of its last two nodes (10nm and 7nm), GlobalFoundries abandoned leading-edge chip development in 2018, and industry leader TSMC is delivering only 15-20% transistor improvement every two years. The cost of new semiconductor manufacturing has also skyrocketed: a new fab now costs more than $20 billion, whereas a fab with the same output cost approximately $5 billion in 2015 (on older nodes). Pursuing Moore’s Law has become a chase toward diminishing returns. In his seminal paper, Gordon Moore predicted that economics would eventually limit transistor density, with the cost of building manufacturing capacity rising exponentially.
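Compounding makes the slowdown concrete. The sketch compares a decade of 50% gains per two-year cycle (the historical rate cited earlier in this article) with TSMC’s 15-20%, alongside the fab cost figures above. Treating transistor improvement and compute improvement as interchangeable is a simplifying assumption, and `growth_over` is a hypothetical helper.

```python
def growth_over(years: float, gain_per_cycle: float, cycle_years: float = 2.0) -> float:
    """Cumulative improvement after `years` at a fixed gain per cycle."""
    return (1.0 + gain_per_cycle) ** (years / cycle_years)


DECADE = 10
historic = growth_over(DECADE, 0.50)   # historical 50% per two-year cycle
tsmc_low = growth_over(DECADE, 0.15)   # 15% per cycle
tsmc_high = growth_over(DECADE, 0.20)  # 20% per cycle

# Fab cost: about $20B for a new fab today vs about $5B in 2015.
fab_cost_multiple = 20 / 5

print(f"historic pace over a decade: {historic:.1f}x")
print(f"current pace over a decade:  {tsmc_low:.1f}x to {tsmc_high:.1f}x")
print(f"fab cost multiple: {fab_cost_multiple:.0f}x")
```

Over ten years, the slower pace delivers roughly a quarter to a third of the historical improvement, while each new fab costs about four times as much.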

The slowing of Moore’s Law will have a dramatic impact on the entire technology ecosystem. Software developers can no longer rely on massive step-function increases in general-purpose compute to deliver the new software and service capabilities that meet emerging and latent customer needs. Piling ever more complex software onto semiconductor hardware that is no longer improving will likely bloat existing processors and yield smaller incremental performance gains. Substantial performance improvements must instead come from new, custom hardware models.

As evidence of this trend, Apple has made a dramatic move to develop its own custom modems and CPUs for its phone, tablet and laptop products. Apple thrives on seamless hardware and software integration to deliver a compelling consumer proposition, which is best achieved with custom, in-house chips. The major software companies are similarly developing custom compute products, using proprietary knowledge of their compute workloads to build semiconductor products that will drive cutting-edge integrated software and service innovation. Microsoft is developing networking compute and graphics capabilities, Amazon acquired Annapurna Labs to lead the reconfiguration of its data center network, and Google has been very active in developing AI hardware and data center networking capability.

While Moore’s Law may have slowed, consumer expectations conditioned by a decade of software-led innovation and an insatiable appetite for new technology have not. These expectations create opportunities for new technologies and new, compelling consumer propositions. This diversity of new workloads reinforces the need for more special purpose hardware. This trifecta (slowing Moore’s Law, new consumer expectations, and emerging hardware) is creating a pivot point of opportunity that is reliant on purpose-built, custom compute.

Future gains in productivity and capability will require new, more efficient forms of compute, and specialized or custom compute is now the focus of massive research and development spending. With general-purpose compute gains stalled, custom compute designed for specific applications or workloads delivers the required functionality more efficiently, on a smaller silicon footprint. Artificial intelligence chips are an example: highly specialized compute built to run neural networks that recognize images or other data, characterize that data, and make predictions. Custom hardware-software co-design will be the norm for special-purpose solutions like AI, and this co-design specialization is what makes these chips orders of magnitude more powerful than general-purpose chips on the workloads they target.

The Semiconductor Supply Shortage

The challenges of a slowed Moore’s Law have also contributed to the chip “supply shortage” that we have heard so much about in the media. Much of the blame for this has been placed on the global supply chain. While this is a contributing factor, it is only part of a larger and deeper problem facing semiconductor manufacturers and their customers.

The semiconductor companies, especially Intel, did a poor job of planning for the slowdown in Moore’s Law. The flip side of increased transistor scaling/density is a decreased need for new factory/fab space: when you shrink the size of compute every two years, you also effectively double manufacturing capacity. When the “shrink” stops, the capacity increases stop, and you need to spend more capital (“capex”) to sustain market growth. Manufacturers knew that Moore’s Law scaling was becoming more difficult and that the costs of achieving it were skyrocketing, yet they waited until very late in the planning cycle to add capacity that would compensate for the slowdown. TSMC announced a new $20 billion US fab in Arizona in 2020, and Samsung announced a large expansion of its Austin, Texas fab the same year. Under new management, Intel has committed to increasing capacity on its current technologies (“nodes,” in semiconductor speak). In the past three months, Intel has announced a $20 billion two-fab complex in Ohio and a $27 billion new fab in Germany. These fabs will add much-needed capacity to make up for the slowdown of Moore’s Law.
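The link between shrinking and capacity can be shown with a toy model. Every number below is hypothetical: demand for chips grows 20% a year, and while the shrink continues, each wafer yields twice as many chips every two years. The question is how many wafer starts, and therefore how much fab capacity, are needed to keep up.

```python
DEMAND_GROWTH = 0.20   # hypothetical annual growth in chip demand
BASE_WAFERS = 100_000  # hypothetical wafer starts needed in year 0


def wafers_needed(year: int, shrink: bool) -> float:
    """Wafer starts required in `year` to meet demand.

    With the shrink, chips per wafer double every two years; without it,
    chips per wafer stay flat, so wafer starts must track demand directly.
    """
    demand_multiple = (1.0 + DEMAND_GROWTH) ** year
    chips_per_wafer_multiple = 2.0 ** (year / 2) if shrink else 1.0
    return BASE_WAFERS * demand_multiple / chips_per_wafer_multiple


for year in (0, 2, 4, 6):
    with_shrink = wafers_needed(year, shrink=True)
    without_shrink = wafers_needed(year, shrink=False)
    print(f"year {year}: {with_shrink:>9,.0f} wafers with shrink, "
          f"{without_shrink:>9,.0f} without")
```

With the shrink, the same fabs absorb the demand growth and required wafer starts actually fall; without it, wafer starts, and the capex behind them, must roughly triple over six years.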

This supply constraint has been coupled with a massive, COVID-driven demand spike for networking compute. The COVID pandemic spiked demand for personal computers, streaming entertainment sources, virtual office connections, and Zoom calls. All of this created a mammoth increase in networking and data center compute. Consider how much the quality of a video call has improved in the past two years. This significant shift in the demand curve has caused price-conscious consumers of compute, like the auto industry, to suffer.

So, this is not just a supply chain issue. Yes, difficulty in sourcing raw materials from China is a problem, but poor long-range planning and a massive shift in the demand curve have created a perfect storm that industry pundits have labeled a “semiconductor supply shortage.”

How does custom compute affect the supply shortage at the semiconductor manufacturers? Specialized compute requires less silicon area than large, general-purpose compute, so developing specialized functionality at the silicon level will increase manufacturer productivity: manufacturers can produce more processors within their existing footprint. Large, monolithic CPUs and GPUs will be overtaken by specialized AI, computer vision, smart sensor, and lower-power hardware. These specialized chips are smaller and more efficient to manufacture than a monolithic, general-purpose CPU or GPU. Manufacturers therefore have a huge economic incentive to facilitate this change, as it will raise yields and restore profitability toward historic levels. More complex chips take more area and, without Moore’s Law scaling, produce lower profits.
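The area argument can be made concrete with two standard first-order approximations: a gross-dies-per-wafer estimate (wafer area divided by die area, minus an edge-loss term) and the Poisson yield model, in which the fraction of defect-free dies is exp(-D0 × A) for defect density D0 and die area A. The 300 mm wafer, the defect density and both die sizes below are hypothetical, chosen only to compare one large general-purpose die with a die one-sixth its size.

```python
import math

WAFER_DIAMETER_MM = 300.0
DEFECT_DENSITY_PER_MM2 = 0.001  # hypothetical: 0.1 defects per cm^2


def dies_per_wafer(die_area_mm2: float) -> int:
    """Gross dies per wafer: wafer area / die area, minus dies lost at the edge."""
    radius = WAFER_DIAMETER_MM / 2
    gross = (math.pi * radius ** 2 / die_area_mm2
             - math.pi * WAFER_DIAMETER_MM / math.sqrt(2 * die_area_mm2))
    return max(0, math.floor(gross))


def poisson_yield(die_area_mm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-DEFECT_DENSITY_PER_MM2 * die_area_mm2)


def good_dies_per_wafer(die_area_mm2: float) -> float:
    return dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2)


LARGE_DIE_MM2 = 600.0  # hypothetical monolithic general-purpose chip
SMALL_DIE_MM2 = 100.0  # hypothetical specialized chip

for label, area in (("large", LARGE_DIE_MM2), ("small", SMALL_DIE_MM2)):
    print(f"{label}: {dies_per_wafer(area)} dies, "
          f"{poisson_yield(area):.0%} yield, "
          f"{good_dies_per_wafer(area):.0f} good dies per wafer")
```

Six small dies occupy the same silicon as one large die, yet under these assumptions a wafer yields roughly 579 good small dies against about 49 good large ones: nearly twelve small chips per large chip rather than six, because smaller dies lose less to the wafer edge and to defects.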

Opportunities in DeepTech

Outside of semiconductors, we believe that there are similar opportunities in other technology areas. Innovations in battery technology have allowed new technologies like the electric vehicle. Improved digital vision sensors have driven digital SLR cameras into obsolescence. Display technologies are providing both improved resolution and larger television displays while simultaneously allowing videos on a watch face. Computer vision and new sensor technologies will allow a world of automation that we have only begun to imagine. Many of these technologies will enable unprecedented levels of innovation in areas like autonomous driving.

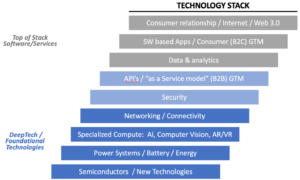

We define our opportunity set based upon the technology stack. Think of the technology stack as a staircase, with basic foundational technologies at the bottom and consumer-facing, software-driven applications at the top. Each step is built upon the steps beneath it.

Semiconductors and power systems sit at the bottom as foundational technologies. Next up the stack are specialized compute platforms like computer vision, voice recognition and artificial intelligence. Above those are networking and communication capabilities that allow the systems to accumulate data and work together. These systems then need a layer of security to protect against malicious actors. Finally, an API layer allows for compelling and diverse go-to-market propositions. The layers from this API level down to the foundational technologies at the bottom of the stack are what we define as DeepTech (blue in the chart above). Above this level are “top-of-the-stack” software and services opportunities such as data and analytics, software apps, direct-to-consumer interfaces and Web 3.0.

It is true that hardware-driven development takes more time and is more complex than software and other top-of-the-stack innovation. But software development is not viable without the underlying DeepTech innovation. Custom DeepTech innovation is now essential because, with the decay of Moore’s Law, manufacturers are no longer consistently delivering better new platforms. Top-of-the-stack software cannot reach its full potential without improvement in bottom-of-the-stack technologies. The best new technologies will pair custom software with new custom hardware.

We believe that DeepTech-led innovation will drive new products and services in facial recognition, voice recognition, low-power edge compute, artificial intelligence, 5G two-way connectivity, new low-earth-orbit satellite communications, improved memory/storage capabilities, micro-LED displays, solid-state Lidar and Radar, embedded security, and ultra-low-power processors, to name just a few. These areas all sit at the frontier of DeepTech development and do not rely solely on Moore’s Law. They will enable new products and value propositions that meet evolving consumer expectations with better, custom performance.

As these new technologies come to market, we expect the consumer model to evolve as well. For instance, the market expects 5 billion new connected devices over the next five years. This proliferation of connected devices will create an explosion of new data, and these massive new data streams will require (1) more efficient processing, transfer and storage solutions, and (2) new analytics models to convert raw, unstructured data for business consumption. The new technologies will also create demand beyond compute. They will change the market for the batteries that power the new devices, for the networking that connects them, and for how we store and access the new data. This data, in turn, will allow prediction of future consumer behavior and trends, so the hardware-driven cycle will be self-perpetuating. We expect similar self-reinforcing new markets in areas such as autonomous vehicles, redesigned data center and cloud infrastructure, new sensors and compute at the edge of the network, and Industry 4.0.

Perhaps the best example of this new hardware-driven innovation is the much discussed “metaverse”. The metaverse is predicated upon new, immersive forms of compute that combine heads-up displays, new generations of sensors that allow hands-free interaction, real-time voice and image recognition, predictive analytics and AI; all packed into a form factor that can be wearable and not generate uncomfortable levels of heat. Combining these technologies into a seamless user experience will require substantial specialization, not large and cumbersome general-purpose functionality.

We are discovering many exciting new business models being built on top of new hardware technologies. My partners at Snowcloud and I have recently been introduced to a start-up developing a unique AI-as-a-service model, to a company that is using software to minimize the data required to train an AI chip, and to a company reinventing battery chemistry to be less reliant on lithium-ion technology. We are seeing computer vision optimizations in which only the pixels that change from frame to frame are processed. Low-power sensors are being developed to monitor the environmental parameters necessary for emerging biological pharmaceuticals. Companies are reimagining the design and performance of hardware. The future of DeepTech-driven innovation is bright, and these hardware changes will drive new ecosystems for data, software, and services development. We are very excited about a post-Moore’s Law world.
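The frame-differencing idea mentioned above can be sketched in a few lines. This is a generic illustration, not any particular company’s method: compare each pixel with the previous frame, and re-run a hypothetical per-pixel analysis (`is_bright`, standing in for an expensive vision model) only where the change exceeds a threshold, reusing earlier results everywhere else. Frames are plain lists of grayscale intensities to keep the example dependency-free.

```python
from typing import Callable

Frame = list[list[int]]  # grayscale frame: rows of 0-255 intensities


def changed_pixels(prev: Frame, curr: Frame, threshold: int = 10) -> list[tuple[int, int]]:
    """Return (row, col) of pixels whose intensity changed by more than threshold."""
    return [
        (r, c)
        for r, (prev_row, curr_row) in enumerate(zip(prev, curr))
        for c, (p, q) in enumerate(zip(prev_row, curr_row))
        if abs(q - p) > threshold
    ]


def update_results(prev: Frame, curr: Frame, results: list[list[bool]],
                   analyze: Callable[[int], bool], threshold: int = 10) -> int:
    """Re-run `analyze` only on changed pixels; return how many were processed."""
    changed = changed_pixels(prev, curr, threshold)
    for r, c in changed:
        results[r][c] = analyze(curr[r][c])
    return len(changed)


def is_bright(value: int) -> bool:
    """Hypothetical stand-in for an expensive per-pixel vision model."""
    return value > 128


prev = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
curr = [[0, 0, 0], [0, 200, 0], [0, 0, 3]]  # one real change, one small flicker
results = [[is_bright(v) for v in row] for row in prev]

processed = update_results(prev, curr, results, is_bright)
print(f"processed {processed} of 9 pixels")
```

Only the pixel that actually changed is re-analyzed; the small flicker falls under the threshold and is skipped. On video where much of the scene is static from one frame to the next, skipping unchanged pixels is what saves compute and power.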

ESG and DeepTech

Addressing environmental, social and governance issues, collectively known as ESG, has become the defining public policy agenda of our time. Not obvious to many are the myriad ways in which DeepTech ecosystems will drive the ESG changes that society is demanding. DeepTech innovations affect infrastructure, power consumption, climate change systems, the intersection of biology and engineering, and sustainability, among others. New edge-of-network sensors will monitor the environment and emissions, more energy-efficient processors will be deployed in the massive data centers of the future, and specialized AI compute will revolutionize our ability to understand and predict the efficacy of new ESG models. The technologies and services classified as DeepTech should be a driving force behind the innovations that support society’s ESG evolution.

Conclusion: Why DeepTech is Compelling Today

Software and top-of-the-stack investing remains an attractive opportunity. Market forecasts call for the $1.5 trillion annual software and services market to grow by 33% to $2.0 trillion over the next five years, and this growth will generate strong investment returns for the winners in the space. Finding those winners will be more competitive, however, with almost $800 billion of venture money invested in top-of-the-stack opportunities over the past five years. That is $800 billion chasing a pool of $500 billion of incremental annual revenue. Perhaps the public markets are right, and private market software valuations are due for a correction.

We know that software can only go as far as the compute upon which it runs. Given the evidence above, any technology investor must thoughtfully consider the inherent risks of staying at the top of the stack and depriving a tech portfolio of needed DeepTech diversification. Market forecasts for DeepTech estimate that the current $700 billion in annual revenue will more than double, to $1.5 trillion, in five years. Only $70 billion, or 10% of venture money, has been invested in DeepTech opportunities over the past five years. This supply/demand imbalance creates a real opportunity. We prefer to invest in a market where $70 billion leads to $700 billion, not one where $800 billion is chasing $500 billion.
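The supply/demand comparison in the last two paragraphs reduces to a few lines of arithmetic. The sketch uses only the article’s own figures (in billions of dollars) and expresses each segment as venture dollars invested per dollar of forecast incremental annual revenue; that ratio is our framing, not a standard metric, and `venture_per_new_revenue` is a hypothetical helper.

```python
# Article figures, in billions of US dollars:
# (current annual revenue, forecast in five years, venture invested over past five years)
SEGMENTS = {
    "top of stack": (1_500, 2_000, 800),
    "DeepTech": (700, 1_500, 70),
}


def venture_per_new_revenue(current: float, forecast: float, venture: float) -> float:
    """Venture dollars invested per dollar of forecast incremental annual revenue."""
    return venture / (forecast - current)


for name, (current, forecast, venture) in SEGMENTS.items():
    increment = forecast - current
    growth = forecast / current - 1
    ratio = venture_per_new_revenue(current, forecast, venture)
    print(f"{name}: +${increment}B ({growth:.0%} growth), "
          f"${ratio:.2f} of venture money per $1 of new annual revenue")
```

On these figures, the top of the stack has attracted about $1.60 of venture money per dollar of new annual revenue, versus roughly nine cents for DeepTech.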